AIoT: The Fusion That’s Redefining Our Connected World
Beyond Connectivity: How AIoT Is Making the Internet of Things Truly Intelligent

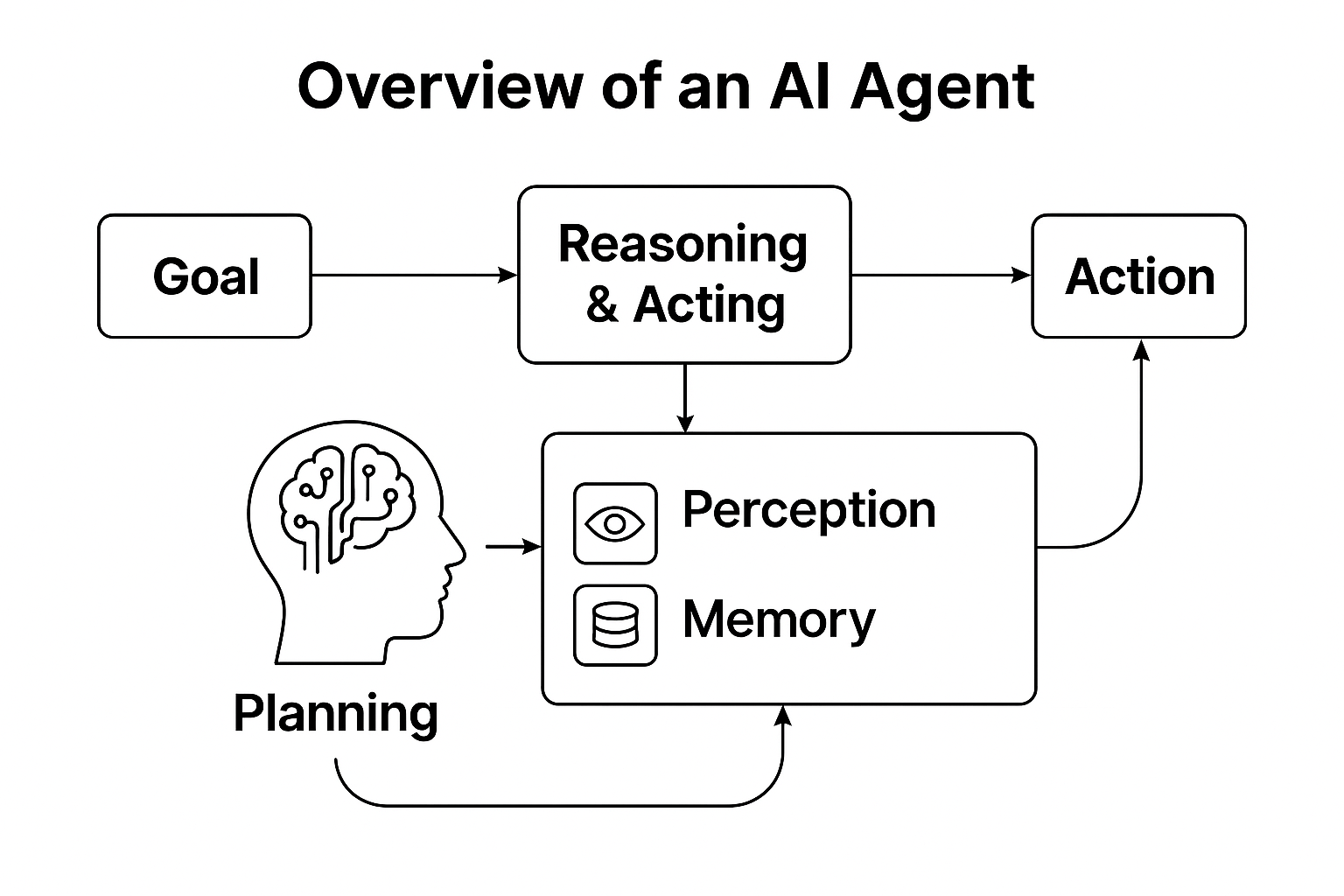

🤖 AIoT: The Fusion That’s Redefining Our World

Artificial Intelligence (AI) and the Internet of Things (IoT) were once considered […]